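Getting Started with Kubernetes Cluster API on vSphere: A Hands-On Walkthrough

The Kubernetes community has a special interest group called sig-cluster-lifecycle. Its best-known subproject is Kubeadm, but today's protagonist is a different one: Cluster-API.

Motivation: create k8s clusters the k8s way, and make k8s as a service possible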

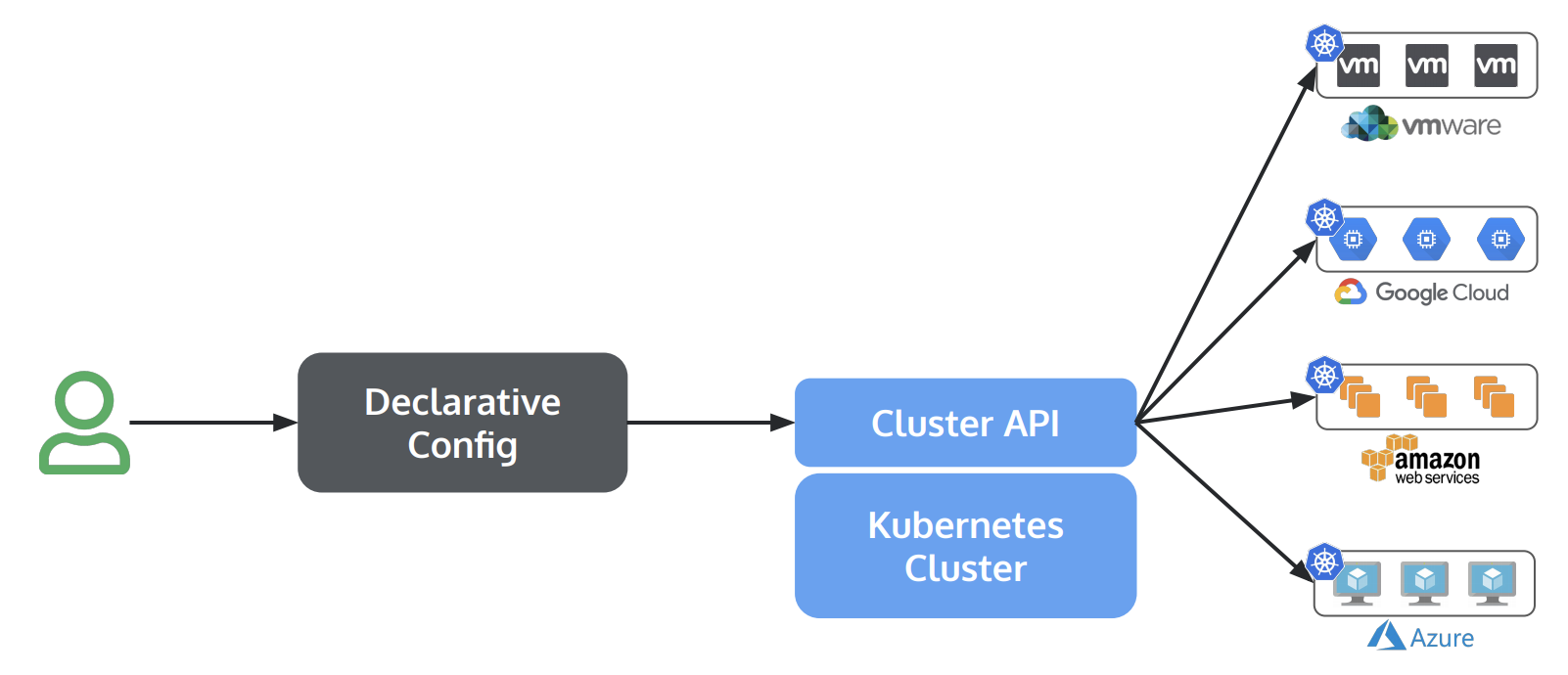

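If, like me, you create Kubernetes clusters so often that you wish there were a Kubernetes-as-a-Service offering, convenient for you and for your users, then creating and managing Kubernetes clusters the Kubernetes way will appeal to you. The Cluster-API project was born for exactly this goal.

The official Cluster-API repository is at https://github.com/kubernetes-sigs/cluster-api. Its first version was released in June 2018, and the first architecture diagram from that time is still available.

How it works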

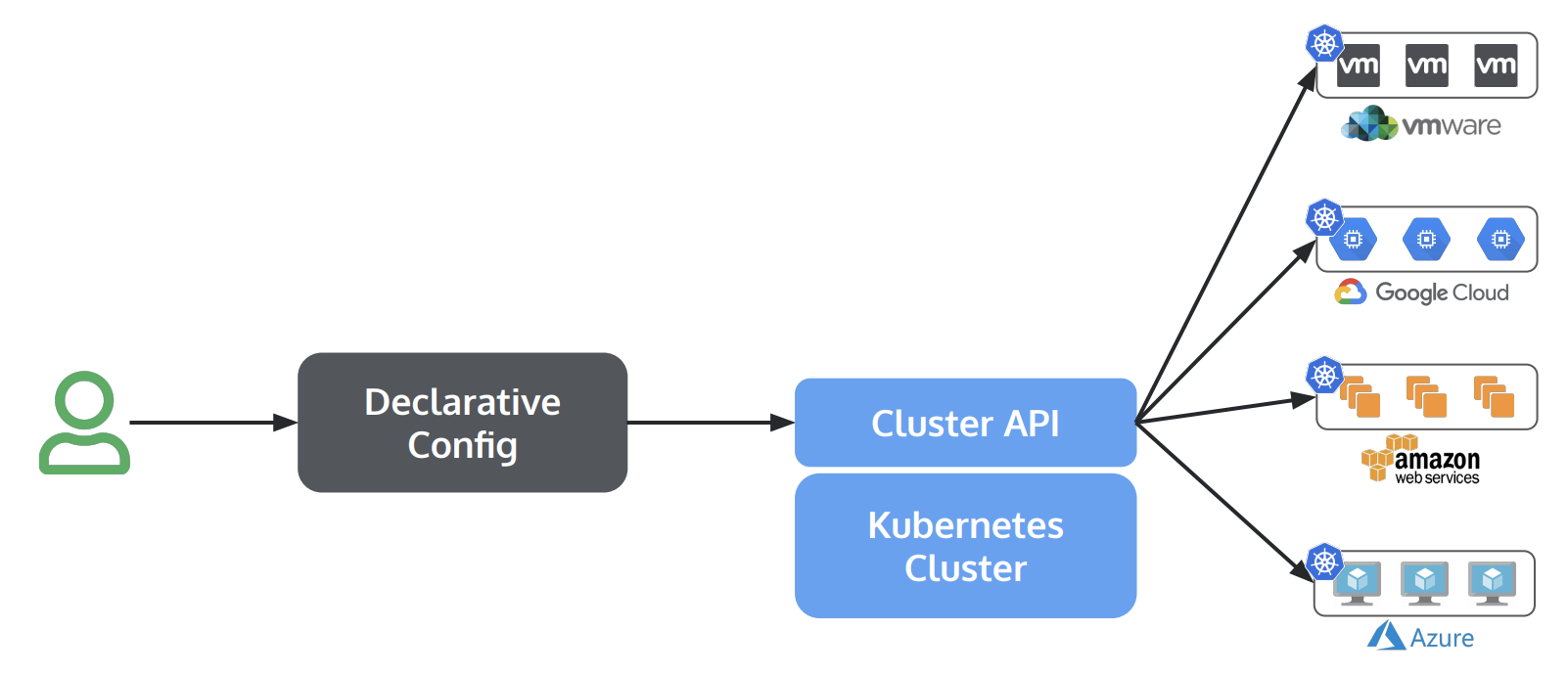

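Today's goal is just to get started, so I will not explain every component in detail, only the overall logic; the figure below is a simple logical diagram. The user only has to write declarative configuration, just like deploying an ordinary application. Cluster-API and the Management k8s cluster then talk to the various infrastructure platforms, so any number of k8s clusters can be created in one step.

As the diagram above shows, the key to using Cluster-API is a capable and complete Management Cluster. I call it the super k8s cluster, and it needs three capabilities:

- The ability to recognize the resource definition of a k8s cluster

- The ability to bootstrap a cluster

- The ability to connect to different infrastructure platforms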
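The three capabilities line up with the three component manifests installed in the steps later in this post. As a rough sketch, the snippet below only prints the install commands and does not run them. The `release_url` and `print_install_plan` helper names are my own; the repositories, versions and file names are the ones used in this walkthrough.

```shell
# release_url REPO VERSION FILE -> download URL of a GitHub release asset
# under the kubernetes-sigs organization.
release_url() {
  printf 'https://github.com/kubernetes-sigs/%s/releases/download/%s/%s\n' "$1" "$2" "$3"
}

# Print one "kubectl apply" command per capability, without running it.
print_install_plan() {
  # 1. recognize cluster resource definitions (core Cluster-API CRDs)
  echo "kubectl apply -f $(release_url cluster-api v0.2.8 cluster-api-components.yaml)"
  # 2. bootstrap a cluster (kubeadm bootstrap provider)
  echo "kubectl apply -f $(release_url cluster-api-bootstrap-provider-kubeadm v0.1.5 bootstrap-components.yaml)"
  # 3. connect to the infrastructure platform (vSphere provider)
  echo "kubectl apply -f $(release_url cluster-api-provider-vsphere v0.5.3 infrastructure-components.yaml)"
}

print_install_plan
```

Keeping the versions in one place like this makes it easier to bump a single provider later.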

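Hands-on practice

Cluster-API currently supports many of the major international public clouds. I have not yet seen any Chinese public cloud among them (my guess is that some have integrated internally but have not open-sourced it). For on-premises users, the options are openstack, vSphere and docker. I happen to have a vSphere environment at hand, so let's play with that.

- Environment

- An existing ordinary k8s v1.16 cluster

- An existing vSphere v6.0 environment

- Goal

- Use the existing k8s cluster together with vSphere to create several virtual machines from scratch and deploy a ready-to-use k8s cluster on them.

- Steps

- Turn the existing k8s cluster into the super cluster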

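# Deploy the cluster api component CRDs and the related controller

# This gives the cluster the ability to recognize the resource definition of a k8s cluster

> kubectl apply -f https://github.com/kubernetes-sigs/cluster-api/releases/download/v0.2.8/cluster-api-components.yaml

# Result: 1 namespace, 4 CRDs, 1 controller and several RBAC resources are created

# These 4 CRDs are an interesting design; more on them later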

> kubectl get crd

clusters.cluster.x-k8s.io

machinedeployments.cluster.x-k8s.io

machines.cluster.x-k8s.io

machinesets.cluster.x-k8s.io

> kubectl get all -n capi-system

NAME READY STATUS RESTARTS AGE

pod/capi-controller-manager-5c995868f5-zmgjd 1/1 Running 0 10m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/capi-controller-manager 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/capi-controller-manager-5c995868f5 1 1 1 10m

# Deploy the kubeadm provider, which creates the core cluster components

# This gives the cluster the ability to bootstrap a cluster

> kubectl apply -f https://github.com/kubernetes-sigs/cluster-api-bootstrap-provider-kubeadm/releases/download/v0.1.5/bootstrap-components.yaml

# Result: 1 namespace, 2 CRDs, 1 Service, 1 controller and several RBAC resources are created

> kubectl get crd

kubeadmconfigs.bootstrap.cluster.x-k8s.io

kubeadmconfigtemplates.bootstrap.cluster.x-k8s.io

> kubectl get all -n cabpk-system

NAME READY STATUS RESTARTS AGE

pod/cabpk-controller-manager-c58d8596f-bzk8v 2/2 Running 0 10m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cabpk-controller-manager-metrics-service ClusterIP 10.103.33.48 <none> 8443/TCP 10m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cabpk-controller-manager 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/cabpk-controller-manager-c58d8596f 1 1 1 10m

# Install the vSphere infra provider to connect to the vSphere environment

# This gives the cluster the ability to manage vSphere resources, so it can create VMs, disks, networks and so on

# This one is a bit special: first create a secret for connecting to vSphere; the yaml applied afterwards uses it

> cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  labels:
    control-plane: controller-manager
  name: capv-system
---
apiVersion: v1
kind: Secret
metadata:
  name: capv-manager-bootstrap-credentials
  namespace: capv-system
type: Opaque
data:
  username: "<your base64-encoded vSphere username>"
  password: "<your base64-encoded vSphere password>"
EOF
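The two `data` fields of the Secret must hold base64-encoded values. Here is a minimal sketch of producing them; the `b64` helper and the credentials are hypothetical placeholders, not values from my environment. It uses `printf '%s'` rather than `echo` because `echo` appends a newline that would end up inside the encoded value.

```shell
# b64 STRING -> base64 of STRING on one line; no trailing newline is encoded.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical placeholder credentials; replace them with your own.
VSPHERE_USERNAME_PLAIN='administrator@vsphere.local'
VSPHERE_PASSWORD_PLAIN='changeme'

# Print the two lines that go under "data:" in the Secret.
echo "username: \"$(b64 "$VSPHERE_USERNAME_PLAIN")\""
echo "password: \"$(b64 "$VSPHERE_PASSWORD_PLAIN")\""
```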

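# At the time of writing, v0.5.4 has already been released and reportedly improves performance; use whichever you prefer

> kubectl apply -f https://github.com/kubernetes-sigs/cluster-api-provider-vsphere/releases/download/v0.5.3/infrastructure-components.yaml

# Result: 1 namespace, 3 CRDs, 1 Service, 1 controller and several RBAC resources are created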

> kubectl get crd

vsphereclusters.infrastructure.cluster.x-k8s.io

vspheremachines.infrastructure.cluster.x-k8s.io

vspheremachinetemplates.infrastructure.cluster.x-k8s.io

> kubectl get all -n capv-system

NAME READY STATUS RESTARTS AGE

pod/capv-controller-manager-869fcd89d7-96985 1/1 Running 0 10m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/capv-controller-manager-metrics-service ClusterIP 10.105.152.54 <none> 8443/TCP 10m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/capv-controller-manager 1/1 1 1 10m

NAME DESIRED CURRENT READY AGE

replicaset.apps/capv-controller-manager-869fcd89d7 1 1 1 10m

Good, you now have a "super cluster"!
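Before moving on, it can help to confirm that all nine CRDs from the three installs are present. The sketch below is one way to do it; `missing_crds` is a helper name I made up, and the expected list is simply the CRD names shown in the `kubectl get crd` output above.

```shell
# The nine CRD names listed in the kubectl output earlier in this post.
EXPECTED_CRDS='clusters.cluster.x-k8s.io
machinedeployments.cluster.x-k8s.io
machines.cluster.x-k8s.io
machinesets.cluster.x-k8s.io
kubeadmconfigs.bootstrap.cluster.x-k8s.io
kubeadmconfigtemplates.bootstrap.cluster.x-k8s.io
vsphereclusters.infrastructure.cluster.x-k8s.io
vspheremachines.infrastructure.cluster.x-k8s.io
vspheremachinetemplates.infrastructure.cluster.x-k8s.io'

# missing_crds: read installed CRD names on stdin (one per line, with or
# without the "type/" prefix that "kubectl get crd -o name" prints) and
# print every expected CRD that is absent.
missing_crds() {
  installed=$(sed 's#^.*/##')
  for crd in $EXPECTED_CRDS; do
    printf '%s\n' "$installed" | grep -qxF "$crd" || echo "$crd"
  done
}

# Usage against a real management cluster (requires kubectl access):
#   kubectl get crd -o name | missing_crds
```

An empty result means the super cluster has everything this walkthrough relies on.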

- 导入官方OVA,作为虚机的创建模板

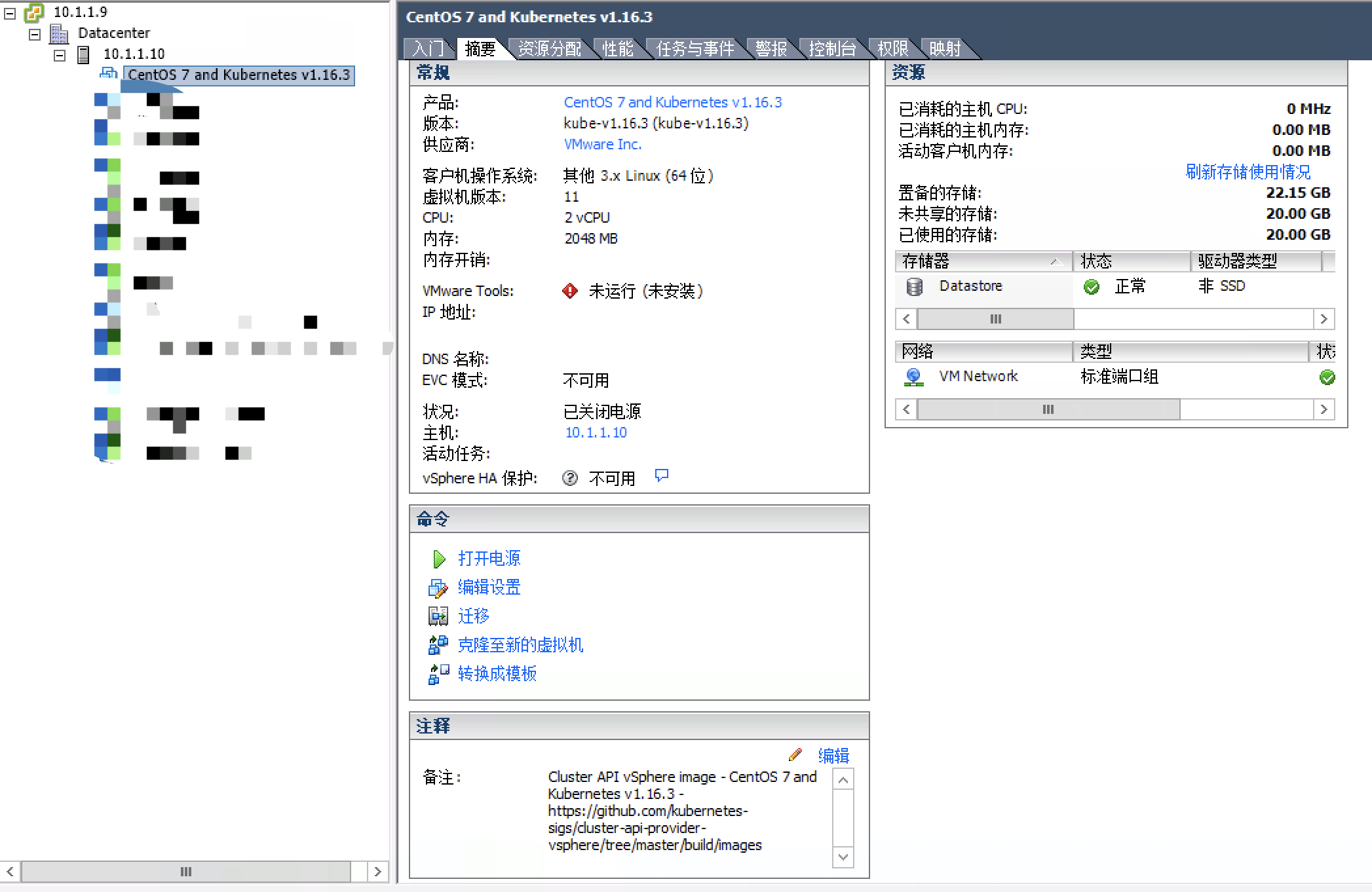

官方为vSphere提供了三种OVA,分别是基于Ubuntu、CentOS和Photon,我这边选取CentOS为例,大小为1.4G。

导入OVA方式很多,通过Windows上的vSphere客户端应该最简单的方式,我也尝试了浏览器端的基于flash的web client,装了对应插件,但是由于现在的浏览器禁止了很多flash的功能,未能成功。导入成功并转成虚机模板的样子如下图:

- 生成目标集群的声明式配置文件,为创建做最后准备

官方提供docker镜像可以直接生成创建集群所需的声明式配置文件,想要运行它,需要准备一个环境变量文件,我用的是这样的。

> cat <<EOF >envvars.txt
# vCenter config/credentials
export VSPHERE_SERVER='10.1.1.9'  # (required) The vCenter server IP or FQDN
export VSPHERE_USERNAME='xxx'  # (required) The username used to access the remote vSphere endpoint
export VSPHERE_PASSWORD='yyy'  # (required) The password used to access the remote vSphere endpoint
# vSphere deployment configs
export VSPHERE_DATACENTER='Datacenter'  # (required) The vSphere datacenter to deploy the management cluster on
export VSPHERE_DATASTORE='datastore01'  # (required) The vSphere datastore to deploy the management cluster on
export VSPHERE_NETWORK='VM Network'  # (required) The VM network to deploy the management cluster on
export VSPHERE_RESOURCE_POOL='*/Resources'  # (required) The vSphere resource pool for your VMs
export VSPHERE_FOLDER='/Datacenter/vm'  # (optional) The VM folder for your VMs, defaults to the root vSphere folder if not set.
export VSPHERE_TEMPLATE='CentOS 7 and Kubernetes v1.16.3'  # (required) The VM template to use for your management cluster.
export VSPHERE_DISK_GIB='50'  # (optional) The VM Disk size in GB, defaults to 20 if not set
export VSPHERE_NUM_CPUS='2'  # (optional) The # of CPUs for control plane nodes in your management cluster, defaults to 2 if not set
export VSPHERE_MEM_MIB='2048'  # (optional) The memory (in MiB) for control plane nodes in your management cluster, defaults to 2048 if not set
export SSH_AUTHORIZED_KEY='ssh-rsa AAAA...'  # (optional) The public ssh authorized key on all machines in this cluster
# Kubernetes configs
export KUBERNETES_VERSION='1.16.3'  # (optional) The Kubernetes version to use, defaults to 1.16.2
export SERVICE_CIDR='100.64.0.0/13'  # (optional) The service CIDR of the management cluster, defaults to "100.64.0.0/13"
export CLUSTER_CIDR='100.96.0.0/11'  # (optional) The cluster CIDR of the management cluster, defaults to "100.96.0.0/11"
export SERVICE_DOMAIN='cluster.local'  # (optional) The k8s service domain of the management cluster, defaults to "cluster.local"
EOF
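A missing required variable tends to show up late, so a quick check of the file before generating anything can save a round trip. The following is only a sketch: `check_envvars` is a helper name of my own, and the list of required variables is taken from the `(required)` comments in the file above. It sources the file in a subshell, so only run it on a file you trust.

```shell
# Variables marked "(required)" in the envvars file above.
REQUIRED_VARS='VSPHERE_SERVER VSPHERE_USERNAME VSPHERE_PASSWORD VSPHERE_DATACENTER VSPHERE_DATASTORE VSPHERE_NETWORK VSPHERE_RESOURCE_POOL VSPHERE_TEMPLATE'

# check_envvars FILE: source FILE in a subshell and print each required
# variable that is unset or empty. Exits non-zero if any is missing.
check_envvars() (
  # Clear inherited values so that only the file's contents count.
  for name in $REQUIRED_VARS; do unset "$name"; done
  . "$1" || exit 2
  status=0
  for name in $REQUIRED_VARS; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      status=1
    fi
  done
  exit "$status"
)

# Usage (give a path containing a slash so "." does not search PATH):
#   check_envvars ./envvars.txt && echo "envvars.txt looks complete"
```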

Now you can run the docker image to generate the yaml:

> cat docker-run-gen-work-cluster-yaml.sh
# The mount paths inside the container follow a fixed convention
# The -c flag sets the name of the target cluster
docker run --rm \
  -v "$(pwd)":/out \
  -v "$(pwd)/envvars.txt":/envvars.txt:ro \
  gcr.io/cluster-api-provider-vsphere/release/manifests:v0.5.3 \
  -c workload-cluster-1

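# After it finishes, the following files have been generated:

# For more details, see the official docs: https://github.com/kubernetes-sigs/cluster-api-provider-vsphere/blob/master/docs/getting_started.md#managing-workload-clusters-using-the-management-cluster

> tree ./out/
./out/
└── workload-cluster-1
    ├── addons.yaml  # Deployment configuration for the Calico CNI network plugin
    ├── cluster.yaml  # vSphere infrastructure environment configuration
    ├── controlplane.yaml  # Defines which vSphere resources the control plane nodes of the target cluster use, plus post-boot configuration such as the hostname
    ├── machinedeployment.yaml  # Defines which vSphere resources the worker nodes of the target cluster use, plus post-boot configuration such as the hostname
    └── provider-components.yaml  # On closer inspection this is just the kubeadm provider configuration from above, so it can be skipped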

- Deploy a cluster with a single command

# Apply all of the generated yaml files

> kubectl apply -f ./out/workload-cluster-1/
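Since provider-components.yaml repeats components that are already installed, you may prefer to apply the generated files one by one and leave it out. Below is a small sketch of that idea; `manifests_to_apply` is a hypothetical helper of mine. The official getting-started guide linked above remains the authority on which file belongs on which cluster.

```shell
# manifests_to_apply DIR: print the generated yaml files in DIR, one per
# line, skipping provider-components.yaml.
manifests_to_apply() {
  for f in "$1"/*.yaml; do
    [ -e "$f" ] || continue          # the glob matched nothing
    case "${f##*/}" in
      provider-components.yaml) ;;   # already installed on the super cluster
      *) printf '%s\n' "$f" ;;
    esac
  done
}

# Usage sketch (requires kubectl and the generated files):
#   manifests_to_apply ./out/workload-cluster-1 | while read -r f; do
#     kubectl apply -f "$f"
#   done
```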

- All done, let's see the result

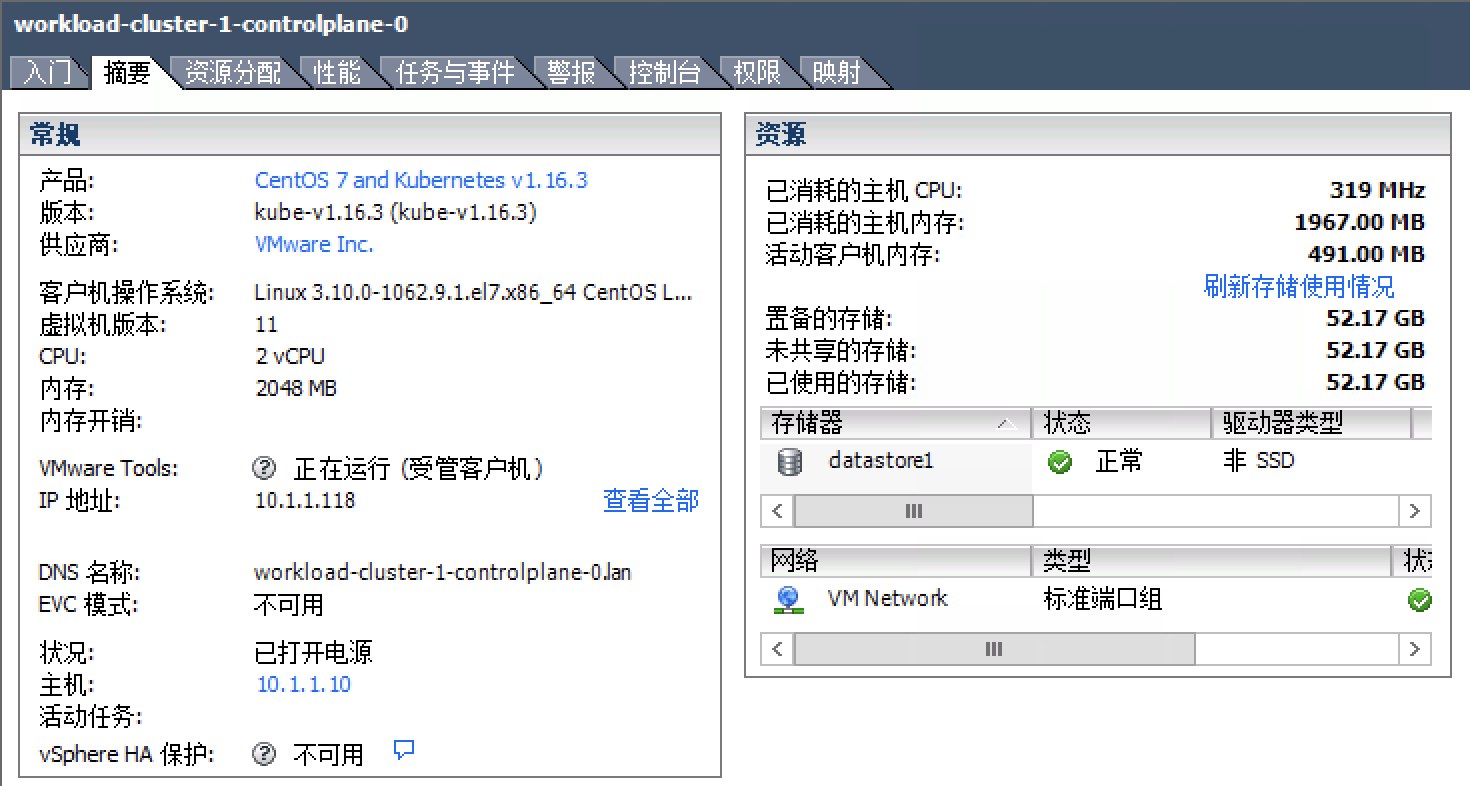

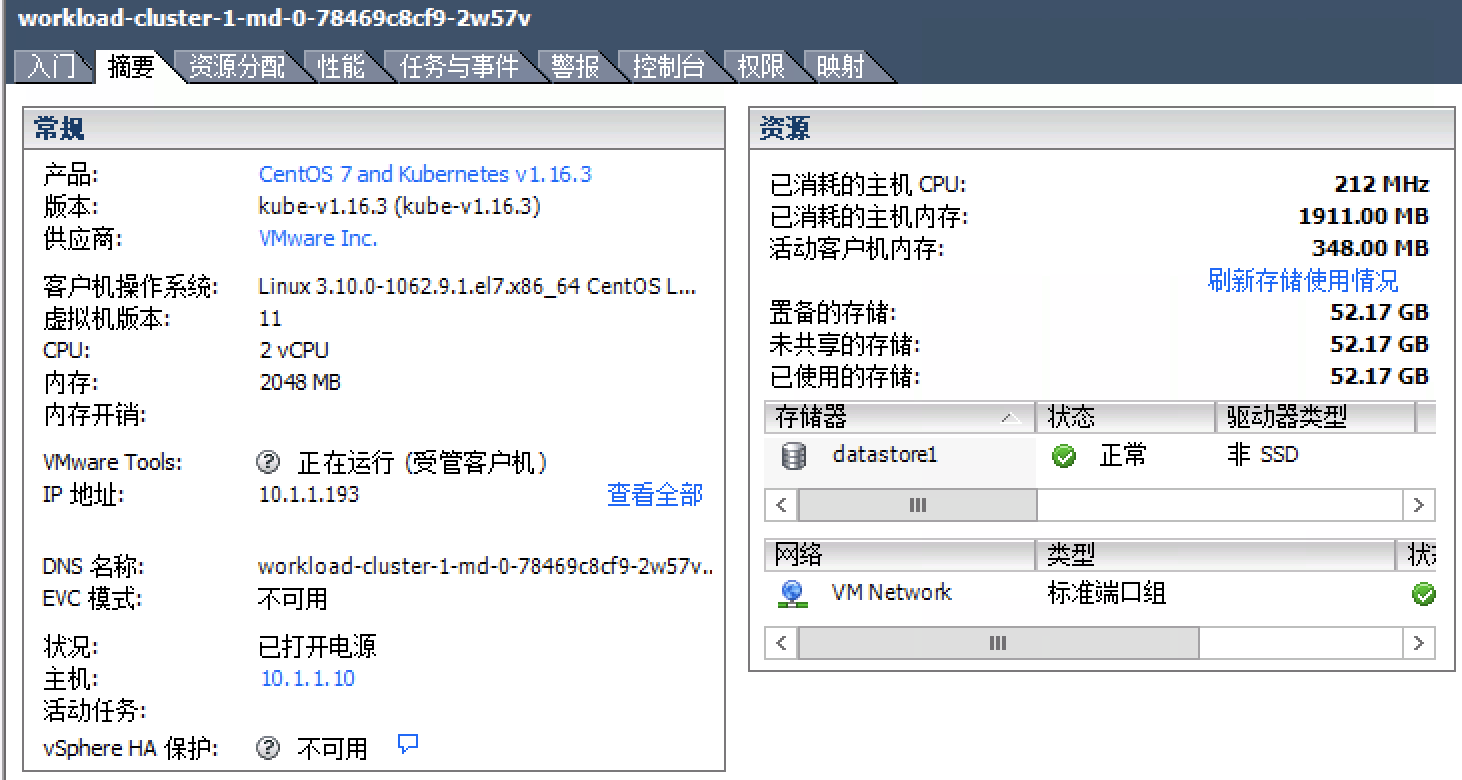

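In my case, after about 10 minutes I could see that two VMs had been created, and after about 20 minutes I could connect to the cluster (I suspect most of that time went to pulling images).

This is the control plane node:

This is the worker node:

To access the target cluster with kubectl, first export the credentials as a kubeconfig:

# Export the kubeconfig from the secret in the management cluster (the "super cluster")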

> kubectl get secret workload-cluster-1-kubeconfig -o=jsonpath='{.data.value}' | \

{ base64 -d 2>/dev/null || base64 -D; } >vsphere-kubeconfig

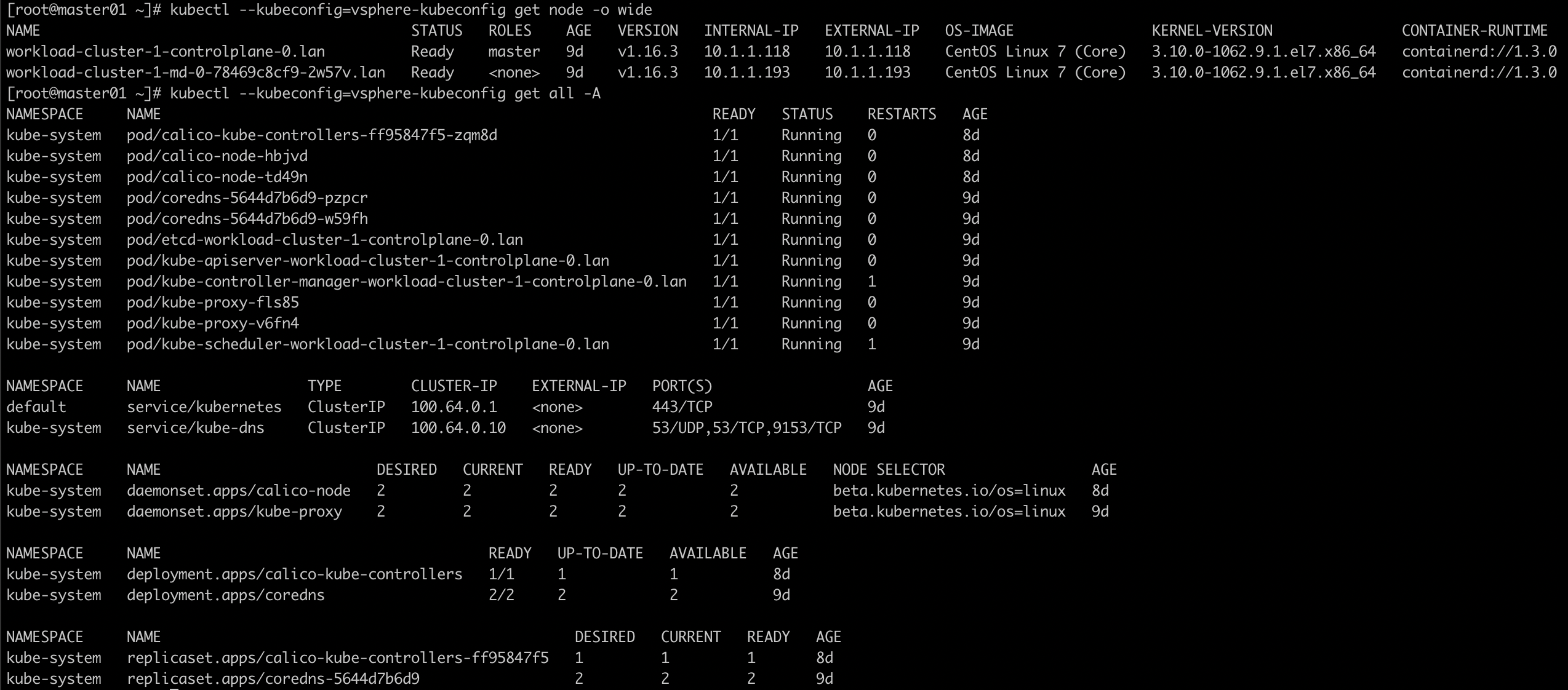

Connect and take a look. Pretty cool, isn't it?

And that is how you create k8s the k8s way: make k8s-as-a-service happen!
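Because the new cluster took around 20 minutes to become reachable in my case, polling beats checking by hand. Here is a minimal retry helper as a sketch; `retry_until` is my own name, and the elapsed time is approximate since it only counts the sleep intervals, not the time the command itself takes.

```shell
# retry_until TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Run COMMAND repeatedly until it succeeds, sleeping INTERVAL_SECONDS between
# attempts. Give up (return 1) once the accumulated intervals exceed the timeout.
retry_until() {
  timeout=$1
  interval=$2
  shift 2
  elapsed=0
  while ! "$@"; do
    elapsed=$((elapsed + interval))
    if [ "$elapsed" -gt "$timeout" ]; then
      echo "gave up after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}

# Usage sketch (requires kubectl and the exported vsphere-kubeconfig):
#   retry_until 1800 30 kubectl --kubeconfig vsphere-kubeconfig get nodes
```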

Design of the core Cluster-API CRDs

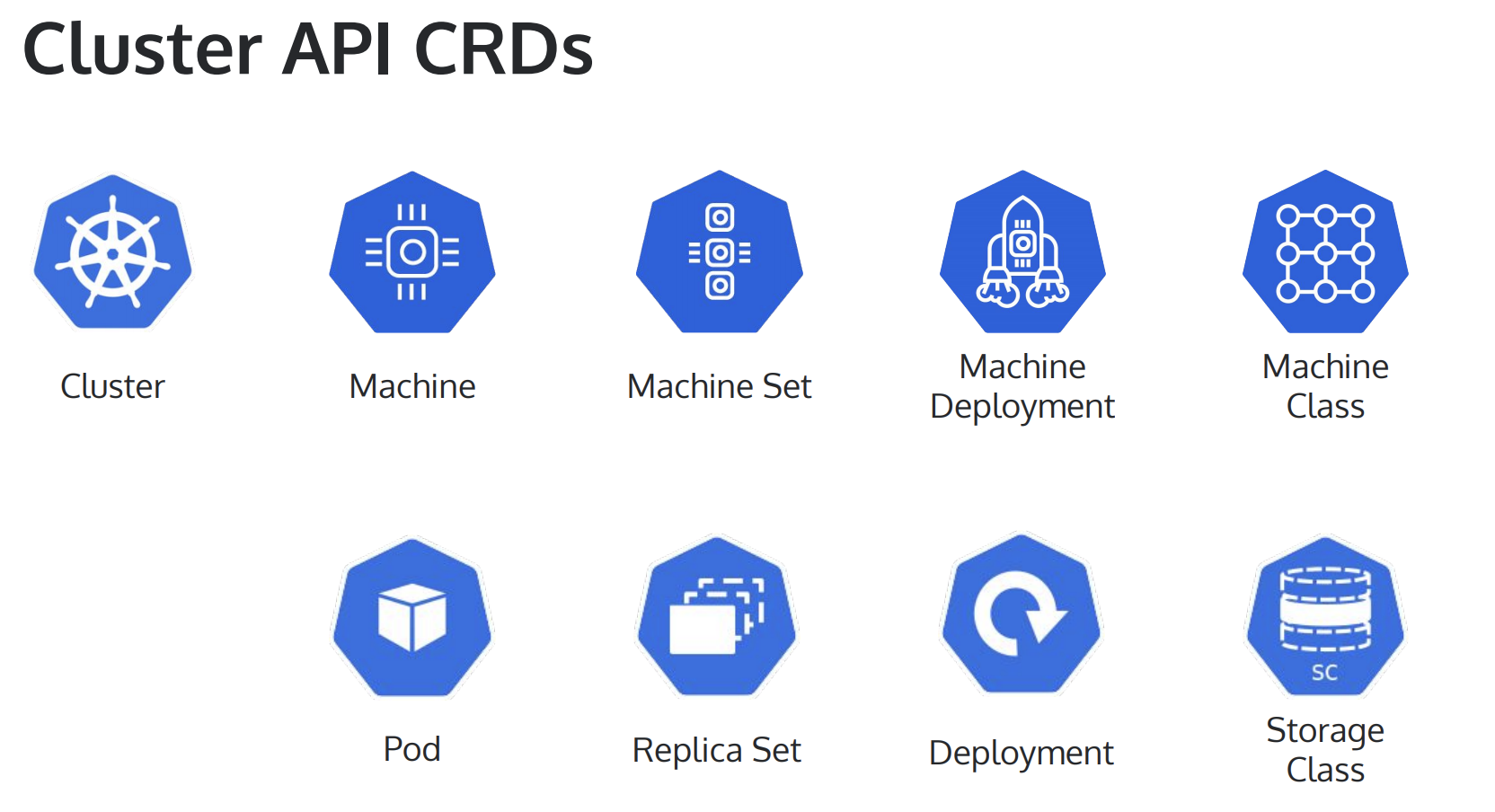

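Remember the 4 core CRDs I held back on earlier?

# These CRDs are an interesting design

> kubectl get crd
clusters.cluster.x-k8s.io
machinedeployments.cluster.x-k8s.io
machines.cluster.x-k8s.io
machinesets.cluster.x-k8s.io
# plus one
vspheremachinetemplates.infrastructure.cluster.x-k8s.io

These custom resources look hard to understand at first glance, but they can be compared with common k8s resources:

Resources in the same column, one above the other, are conceptually similar (the machine class in the figure above is the machine template).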
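For readers who cannot see the figure, the comparison can be written down as a tiny lookup. This is a sketch that assumes the usual pairing drawn in Cluster API introductions (Machine with Pod, MachineSet with ReplicaSet, MachineDeployment with Deployment). The `builtin_analogue` helper is hypothetical, and I leave the template pairing out because I am not reproducing the figure here.

```shell
# builtin_analogue KIND: print the core Kubernetes resource that plays a
# similar role to the given Cluster-API kind (assumed pairing, see above).
builtin_analogue() {
  case "$1" in
    Machine)           echo "Pod" ;;
    MachineSet)        echo "ReplicaSet" ;;
    MachineDeployment) echo "Deployment" ;;
    *)                 echo "no direct analogue listed" ;;
  esac
}

# Print the comparison as a small table.
for kind in Machine MachineSet MachineDeployment; do
  printf '%-18s ~ %s\n' "$kind" "$(builtin_analogue "$kind")"
done
```

Read this way, a MachineDeployment manages MachineSets, which manage Machines, much as a Deployment manages ReplicaSets, which manage Pods.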

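Create your own provider

Every company has its own infrastructure environment. You can build on Cluster-API to make your k8s take off, and the community providers are all good references.

Postscript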

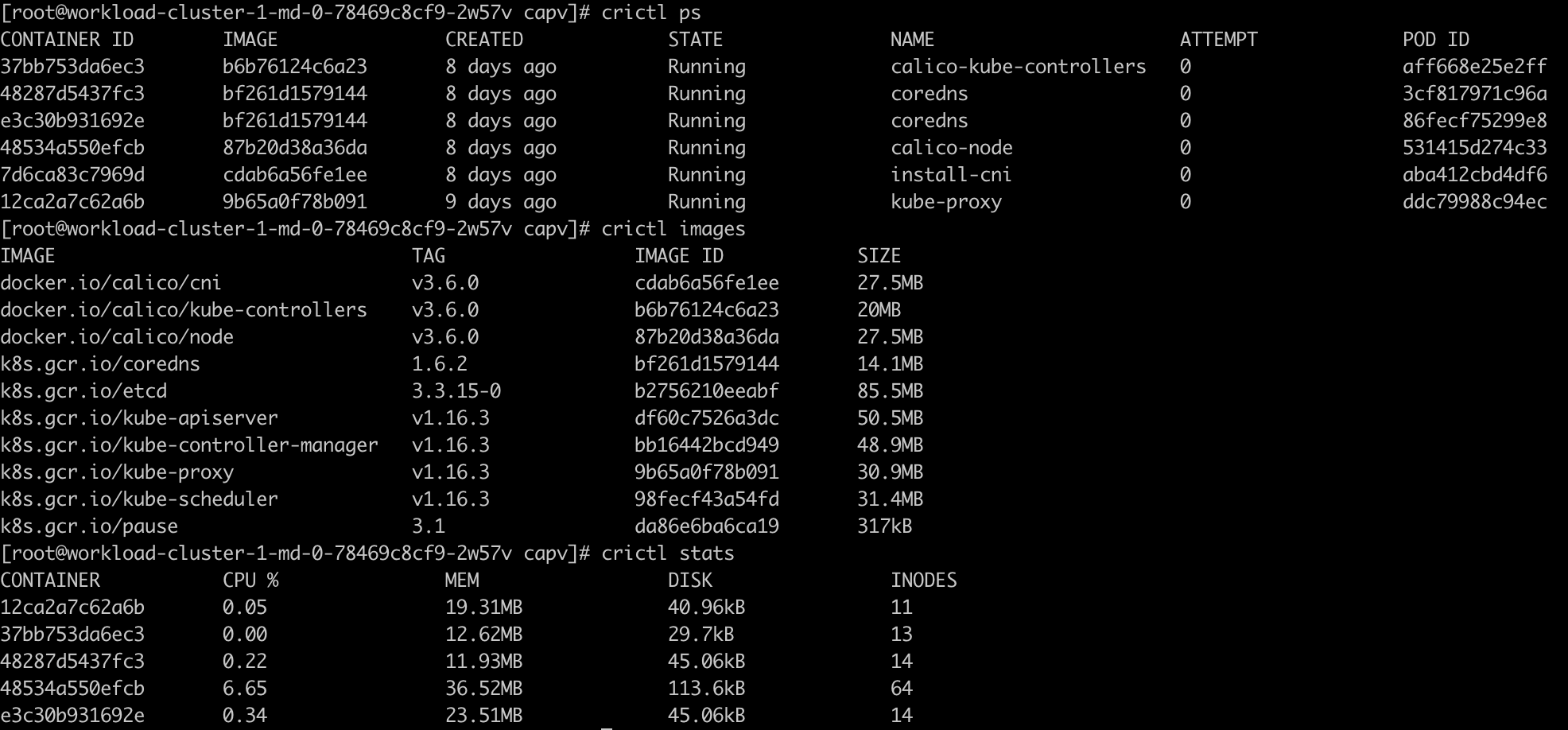

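- How to use containerd: crictl!

When I ssh'ed into a node of the target cluster to look around, I found that the docker command did not work: docker-engine is not installed at all, and containerd manages the containers. So how do I run "docker ps"? The answer is crictl ps. The crictl command-line tool is installed by default, so it is quite convenient. crictl has a lot of options and is just as capable as docker for poking around.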
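If your fingers keep typing docker, a small translation helper can serve as a cheat sheet. This is a sketch: `crictl_for` is a hypothetical name, and it only covers subcommands that crictl offers under the same name. Something like `docker build` has no counterpart, because crictl is a debugging client for the container runtime, not a build tool.

```shell
# crictl_for DOCKER_SUBCOMMAND: print the crictl command with the same name,
# or return 1 when there is no same-named equivalent.
crictl_for() {
  case "$1" in
    ps|images|logs|exec|inspect|pull|rmi|stats)
      echo "crictl $1" ;;
    *)
      echo "no direct crictl equivalent for: docker $1"
      return 1 ;;
  esac
}

# Example: look up what to type instead of "docker ps" and "docker images".
crictl_for ps
crictl_for images
```

On the node itself, `crictl pods` additionally lists the pod sandboxes, which docker has no notion of.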

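- Planning to record a video so you can experience it first-hand

This is a long technical post, so I wonder whether a video would be easier to digest, something like an unboxing review of open source software. If you want one, +1 in the comments.

References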

- https://github.com/kubernetes-sigs/cluster-api-provider-vsphere

- https://cluster-api.sigs.k8s.io/user/concepts.html

- https://kccna18.sched.com/event/Grcl/intro-cluster-lifecycle-sig-robert-bailey-google-timothy-st-clair-heptio

- https://itnext.io/kubernetes-cluster-creation-on-baremetal-host-using-cluster-api-1c2373230a17
