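Building the "Linux" of the Cloud-Native Era from Source: Kubernetes

Customizing your own Linux at the source level may be a bit hard, so why not customize the "Linux of the cloud-native era", Kubernetes, instead? This article shows how to compile your very own Kubernetes from source.

I especially recommend Zheng Dongxu's book 《Kubernetes源码剖析》 (Kubernetes Source Code Analysis), which will give you a more complete understanding of Kubernetes.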


Official Guide

As the most active project in cloud computing today, Kubernetes already documents how to build it in its GitHub repository. The first sentence of the README reads:

Building Kubernetes is easy if you take advantage of the containerized build environment.

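In other words, if you make good use of the containerized build environment, compiling your own Kubernetes is easy. Today we will walk through two build approaches, native (non-containerized) and containerized, compare them, and see whether that sentence holds up. (There is also a third official approach based on Bazel, which interested readers can look into.)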

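Environment Setup

- Lab environment and operating system: Vagrant + Ubuntu 20.04 (ubuntu/focal64)

As a Mac user who may be a bit of a neat freak, I prefer to spin up a clean virtual machine and tinker with new things inside it. That way the Mac itself is not "polluted", and I also get to go through setting up the environment from scratch.

One important note: the Vagrant VM I used is configured with 2 CPU cores and 8G of memory (2C8G). The official documentation mentions that builds are likely to fail with less than 8G of memory.

- Software to install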

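- gvm, for switching between Go versions at any time

gvm stands for "Go Version Manager". As the name suggests, it manages multiple versions of the Go environment, which makes it invaluable for anyone who develops and tests across versions. (The first XXvm tool I ever used was nvm, and I still sigh over those Node.js version numbers.)

By default gvm compiles and installs Go from source, so it needs a pile of gcc-related tools. If you have no need for that, I suggest installing from binaries instead. Here is a brief look at the installation process:

- git, for switching between versions of the Kubernetes source at any time

There are many ways to get the Kubernetes source. If, like me, you work with many versions of the Kubernetes code, I recommend go get + git. Most mainstream Linux distributions ship with git, so there is nothing to install. Here is a brief look at fetching the Kubernetes source:

- Docker, for managing the environment the build depends on

Packaging all dependencies into a Docker image is a common practice in development and testing, and a use case Docker is particularly well suited to. The Kubernetes build environment does exactly this. Installing Docker is very simple; I usually get it done with a single command.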
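For the git step above, a minimal sketch of fetching the source into GOPATH and switching to a given version might look like the following. It uses a plain git clone rather than go get, and the repository URL, the helper names, and the release-1.14 ref are assumptions for illustration, not commands from the original article. With DRY_RUN=1 the function only prints the git commands it would run.

```shell
#!/bin/sh
# Sketch: fetch the Kubernetes source into GOPATH and switch to a chosen ref.
# Assumptions (not from the article): the repo URL, the helper names, the ref.
K8S_REPO="https://github.com/kubernetes/kubernetes.git"

# Print the source directory used throughout the article for a given GOPATH.
k8s_src_dir() {
    printf '%s/src/k8s.io/kubernetes\n' "${1%/}"
}

# Clone if the checkout is missing, then check out the requested ref.
# With DRY_RUN=1 the git commands are printed instead of executed.
fetch_k8s_source() (
    dir=$(k8s_src_dir "$1")
    ref=$2
    run() {
        if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
    }
    [ -d "$dir/.git" ] || run git clone "$K8S_REPO" "$dir"
    run git -C "$dir" checkout "$ref"
)

# Dry run: show what would be executed for the article's GOPATH.
DRY_RUN=1 fetch_k8s_source /root/gop/ release-1.14
```

Switching versions later is then just another call with a different tag or branch name as the second argument.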


Native Build

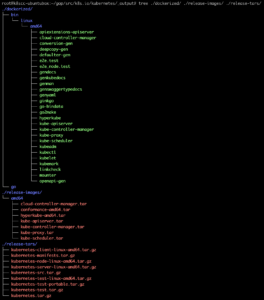

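A "native build" means running make to compile the Kubernetes components directly into binaries. It relies mainly on the Makefile in the root of the Kubernetes source tree, which in fact points to build/root/Makefile. I recommend reading that Makefile through; line 42 shows that the final binaries are placed in _output/bin/.

Building All Kubernetes Components

The whole build process looks like this: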

> cd /root/gop/src/k8s.io/kubernetes/

> make # or `make all`

+++ [0716 01:20:49] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/deepcopy-gen

+++ [0716 01:20:56] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/defaulter-gen

+++ [0716 01:21:01] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/conversion-gen

+++ [0716 01:21:09] Building go targets for linux/amd64:

./vendor/k8s.io/kube-openapi/cmd/openapi-gen

+++ [0716 01:21:20] Building go targets for linux/amd64:

./vendor/github.com/jteeuwen/go-bindata/go-bindata

+++ [0716 01:31:46] Building go targets for linux/amd64:

cmd/kube-proxy

cmd/kube-apiserver

cmd/kube-controller-manager

cmd/cloud-controller-manager

cmd/kubelet

cmd/kubeadm

cmd/hyperkube

cmd/kube-scheduler

vendor/k8s.io/apiextensions-apiserver

cluster/gce/gci/mounter

cmd/kubectl

cmd/gendocs

cmd/genkubedocs

cmd/genman

cmd/genyaml

cmd/genswaggertypedocs

cmd/linkcheck

vendor/github.com/onsi/ginkgo/ginkgo

test/e2e/e2e.test

cmd/kubemark

vendor/github.com/onsi/ginkgo/ginkgo

test/e2e_node/e2e_node.test

# list all the generated binary files

> ls -lh ./_output/bin

total 1.9G

-rwxr-xr-x 1 root root 41M Jul 16 01:35 apiextensions-apiserver

-rwxr-xr-x 1 root root 96M Jul 16 01:35 cloud-controller-manager

-rwxr-xr-x 1 root root 6.5M Jul 16 01:21 conversion-gen

-rwxr-xr-x 1 root root 6.5M Jul 16 01:20 deepcopy-gen

-rwxr-xr-x 1 root root 6.5M Jul 16 01:20 defaulter-gen

-rwxr-xr-x 1 root root 132M Jul 16 01:35 e2e.test

-rwxr-xr-x 1 root root 184M Jul 16 01:35 e2e_node.test

-rwxr-xr-x 1 root root 41M Jul 16 01:35 gendocs

-rwxr-xr-x 1 root root 198M Jul 16 01:35 genkubedocs

-rwxr-xr-x 1 root root 205M Jul 16 01:35 genman

-rwxr-xr-x 1 root root 3.9M Jul 16 01:35 genswaggertypedocs

-rwxr-xr-x 1 root root 41M Jul 16 01:35 genyaml

-rwxr-xr-x 1 root root 8.4M Jul 16 01:35 ginkgo

-rwxr-xr-x 1 root root 2.0M Jul 16 01:21 go-bindata

-rwxr-xr-x 1 root root 4.5M Jul 16 01:20 go2make

-rwxr-xr-x 1 root root 202M Jul 16 01:35 hyperkube

-rwxr-xr-x 1 root root 160M Jul 16 01:35 kube-apiserver

-rwxr-xr-x 1 root root 110M Jul 16 01:35 kube-controller-manager

-rwxr-xr-x 1 root root 35M Jul 16 01:35 kube-proxy

-rwxr-xr-x 1 root root 38M Jul 16 01:35 kube-scheduler

-rwxr-xr-x 1 root root 38M Jul 16 01:35 kubeadm

-rwxr-xr-x 1 root root 42M Jul 16 01:35 kubectl

-rwxr-xr-x 1 root root 123M Jul 16 01:35 kubelet

-rwxr-xr-x 1 root root 119M Jul 16 01:35 kubemark

-rwxr-xr-x 1 root root 5.3M Jul 16 01:35 linkcheck

-rwxr-xr-x 1 root root 1.6M Jul 16 01:35 mounter

-rwxr-xr-x 1 root root 12M Jul 16 01:21 openapi-gen

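In total, close to 30 binaries were generated (27 in the listing above). Besides the core components whose names start with kube, there are many others: some are for testing, some generate code, and some generate documentation. If you are interested, 《Kubernetes源码剖析》 covers them in great detail.

Here I will only talk about one "by-product" I know well: deepcopy-gen. I also mentioned this generator in my hands-on article 《Kubernetes CRD&Controller入门实践》. It generates deep-copy methods for the Kubernetes CRD resource types you define, which you then rely on when implementing the corresponding controller. There are many tools like it, and to make them easier for developers to use, the Kubernetes project provides an all-in-one tool: Code Generator.

Building Only kubectl

Most of the time we only want to customize one Kubernetes component rather than all of them, for example the kubectl command-line tool. We can build a single component with make WHAT=cmd/<component-name>: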

> make WHAT=cmd/kubectl

+++ [0714 14:13:00] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/deepcopy-gen

+++ [0714 14:13:07] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/defaulter-gen

+++ [0714 14:13:12] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/conversion-gen

+++ [0714 14:13:21] Building go targets for linux/amd64:

./vendor/k8s.io/kube-openapi/cmd/openapi-gen

+++ [0714 14:13:29] Building go targets for linux/amd64:

./vendor/github.com/jteeuwen/go-bindata/go-bindata

+++ [0714 14:13:31] Building go targets for linux/amd64:

cmd/kubectl

> root@k8scc-ubuntubox:~/gop/src/k8s.io/kubernetes/_output/bin# ll

total 79876

drwxr-xr-x 2 root root 4096 Jul 14 14:14 ./

drwxr-xr-x 3 root root 4096 Jul 14 14:13 ../

-rwxr-xr-x 1 root root 6775744 Jul 14 14:13 conversion-gen*

-rwxr-xr-x 1 root root 6771552 Jul 14 14:13 deepcopy-gen*

-rwxr-xr-x 1 root root 6742912 Jul 14 14:13 defaulter-gen*

-rwxr-xr-x 1 root root 2059136 Jul 14 14:13 go-bindata*

-rwxr-xr-x 1 root root 4713463 Jul 14 14:13 go2make*

-rwxr-xr-x 1 root root 43053888 Jul 14 14:14 kubectl*

-rwxr-xr-x 1 root root 11650592 Jul 14 14:13 openapi-gen*

root@k8scc-ubuntubox:~/gop/src/k8s.io/kubernetes/_output/bin# ./kubectl

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

...

As you can see, apart from a few necessary by-products, kubectl is indeed the only component that was built.
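Building on the WHAT variable shown above, here is a small sketch for composing its value when you want more than one component. The helper names what_arg and build_cmd are hypothetical, and passing several space-separated directories in WHAT is an assumption worth verifying against the Makefile in your checkout. The script only prints the make command; it does not run it.

```shell
#!/bin/sh
# Sketch: turn component names into the value passed to `make WHAT=...`.
# what_arg and build_cmd are hypothetical helpers, not Kubernetes build scripts.

# Prefix each component name with cmd/ and join them with spaces.
what_arg() (
    out=""
    for name in "$@"; do
        out="${out:+$out }cmd/$name"
    done
    printf '%s\n' "$out"
)

# Print (not run) the make command for the given components.
build_cmd() {
    printf 'make WHAT="%s"\n' "$(what_arg "$@")"
}

build_cmd kubectl kubelet
# prints: make WHAT="cmd/kubectl cmd/kubelet"
```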

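Rebuilding After Customizing the kubectl Code

Now that we know how to build a single component, let's try a customization. The following modifies the kubectl source, rebuilds it, and verifies the result: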

> cd /root/gop/src/k8s.io/kubernetes/pkg/kubectl/cmd

> vim cmd.go

# go to line 411

# add your own code into help text

> cd /root/gop/src/k8s.io/kubernetes/

> make WHAT=cmd/kubectl

# wait for the compilation to finish

> cd /root/gop/src/k8s.io/kubernetes/_output/bin

# execute the target binary

> ./kubectl

kubectl controls the Kubernetes cluster manager.

### new code

Customized by David, you can find me here: https://davidlovezoe.club.

### new code

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner):

create Create a resource from a file or from stdin.

expose Take a replication controller, service, deployment or pod and expose it as a new Kubernetes Service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

...
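To check a customization like the one above without reading the help text by eye, something along these lines could be used. has_marker is a hypothetical helper, and the marker is whatever text you added to the source; the paths in the usage comment follow the article's layout.

```shell
#!/bin/sh
# Sketch: succeed when a command's output contains a fixed marker string.
# has_marker is a hypothetical helper name.
# Usage: has_marker <marker> <command> [args...]
has_marker() (
    marker=$1
    shift
    # Capture stdout and stderr, then search for the marker as a literal string.
    "$@" 2>&1 | grep -qF -- "$marker"
)

# Example against the rebuilt binary (commented out; needs a finished build):
# has_marker "Customized by David" ./_output/bin/kubectl && echo "custom build OK"
```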

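Containerized Build

At last we arrive at the long-awaited containerized build. Kubernetes provides official entry points for it, again driven by make: make quick-release and make release. The former builds artifacts only for the current platform (since I am building on Linux, it produces only artifacts that run on Linux) and skips the unit tests. The latter builds artifacts for the three major platforms, macOS, Linux and Windows, and runs the unit tests.

Notes:

1. Install Docker beforehand and configure a proxy for pulling images; otherwise you may be unable to pull the official images hosted on k8s.gcr.io. Here is a simple way to configure the proxy: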

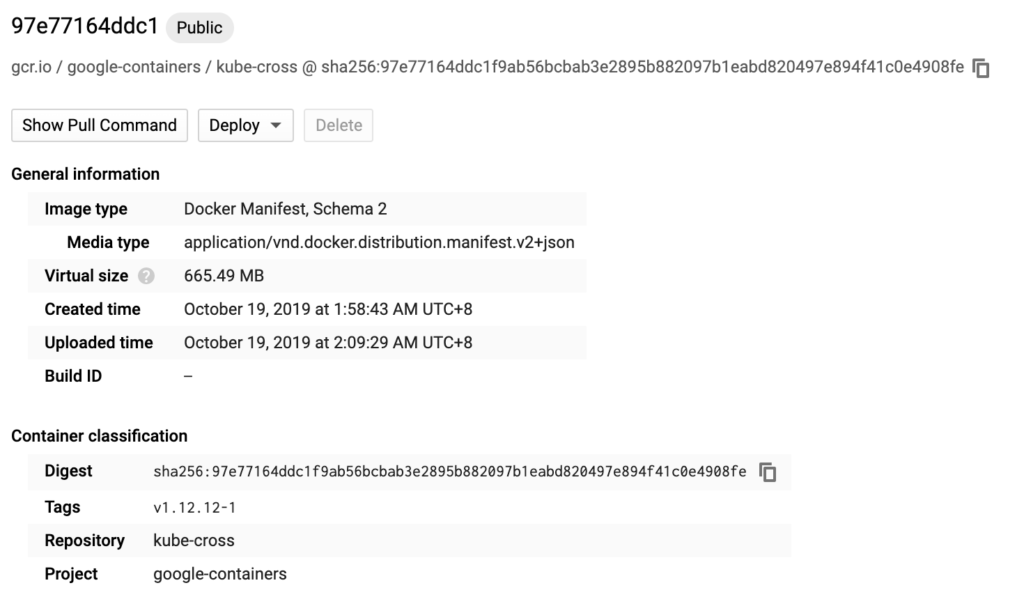

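The base image the build depends on, k8s.gcr.io/kube-cross, is especially large. The official listing shows it at only about 670MB (as in the figure below):

but it is actually close to 2GB once pulled. I suggest pulling this image in advance so the build does not fail because the pull takes too long. Below are the details of the base image I pulled; the image tag may differ depending on your build environment. If you are curious, you can explore gcr yourself.

2. Make sure the build environment (for example, a Linux VM) has at least 8G of memory. In my tests, the build always failed when this was not met.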
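The two notes above can be turned into a small preflight check, sketched below. The function names are hypothetical. The threshold is set to 7.5 GiB rather than a full 8 GiB because the MemTotal reported inside an "8G" VM is typically somewhat lower than 8 GiB; that margin is my assumption. Reading the kube-cross tag from build/build-image/cross/VERSION is also an assumption about the layout of your checkout, so confirm that the file exists before relying on it.

```shell
#!/bin/sh
# Sketch of a preflight check before `make quick-release`.
# Assumptions: helper names, the 7.5 GiB margin, and the VERSION file location.
MIN_MEM_KB=$((7 * 1024 * 1024 + 512 * 1024))

# Read MemTotal in kB from a meminfo-style file (default: /proc/meminfo).
mem_total_kb() {
    awk '/^MemTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Succeed when the given amount of memory (kB) meets the threshold.
enough_memory() {
    [ "$1" -ge "$MIN_MEM_KB" ]
}

# Print the kube-cross image name for a source tree rooted at $1.
kube_cross_image() {
    printf 'k8s.gcr.io/kube-cross:%s\n' "$(cat "$1/build/build-image/cross/VERSION")"
}

# Example use from the source root (commented out; needs Docker and a checkout):
# enough_memory "$(mem_total_kb)" || echo "less than ~8G of memory, build may fail"
# docker pull "$(kube_cross_image .)"
```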

Build and Package in One Go

If the notes above are satisfied, you should get a successful result similar to this:

# set your own GOPATH

> export GOPATH=/root/gop/

> cd /root/gop/src/k8s.io/kubernetes

> make quick-release

+++ [0716 00:33:15] Verifying Prerequisites....

+++ [0716 00:33:15] Building Docker image kube-build:build-7c7cb888ce-5-v1.12.12-1

+++ [0716 00:33:38] Creating data container kube-build-data-7c7cb888ce-5-v1.12.12-1

+++ [0716 00:33:42] Syncing sources to container

+++ [0716 00:33:50] Running build command...

+++ [0716 00:33:58] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/deepcopy-gen

+++ [0716 00:34:05] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/defaulter-gen

+++ [0716 00:34:10] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/conversion-gen

+++ [0716 00:34:19] Building go targets for linux/amd64:

./vendor/k8s.io/kube-openapi/cmd/openapi-gen

+++ [0716 00:34:28] Building go targets for linux/amd64:

./vendor/github.com/jteeuwen/go-bindata/go-bindata

+++ [0716 00:34:29] Building go targets for linux/amd64:

cmd/kube-proxy

cmd/kube-apiserver

cmd/kube-controller-manager

cmd/cloud-controller-manager

cmd/kubelet

cmd/kubeadm

cmd/hyperkube

cmd/kube-scheduler

vendor/k8s.io/apiextensions-apiserver

cluster/gce/gci/mounter

+++ [0716 00:39:38] Building go targets for linux/amd64:

cmd/kube-proxy

cmd/kubeadm

cmd/kubelet

+++ [0716 00:40:02] Building go targets for linux/amd64:

cmd/kubectl

+++ [0716 00:40:15] Building go targets for linux/amd64:

cmd/gendocs

cmd/genkubedocs

cmd/genman

cmd/genyaml

cmd/genswaggertypedocs

cmd/linkcheck

vendor/github.com/onsi/ginkgo/ginkgo

test/e2e/e2e.test

+++ [0716 00:42:45] Building go targets for linux/amd64:

cmd/kubemark

vendor/github.com/onsi/ginkgo/ginkgo

test/e2e_node/e2e_node.test

+++ [0716 00:43:39] Syncing out of container

+++ [0716 00:43:50] Building tarball: manifests

+++ [0716 00:43:50] Building tarball: src

+++ [0716 00:43:50] Starting tarball: client linux-amd64

+++ [0716 00:43:50] Waiting on tarballs

+++ [0716 00:43:59] Building images: linux-amd64

+++ [0716 00:43:59] Building tarball: node linux-amd64

+++ [0716 00:44:00] Starting docker build for image: cloud-controller-manager-amd64

+++ [0716 00:44:00] Starting docker build for image: kube-apiserver-amd64

+++ [0716 00:44:00] Starting docker build for image: kube-controller-manager-amd64

+++ [0716 00:44:00] Starting docker build for image: kube-scheduler-amd64

+++ [0716 00:44:00] Starting docker build for image: kube-proxy-amd64

+++ [0716 00:44:00] Building hyperkube image for arch: amd64

+++ [0716 00:44:00] Building conformance image for arch: amd64

+++ [0716 00:44:22] Deleting docker image k8s.gcr.io/kube-scheduler:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:23] Deleting docker image k8s.gcr.io/kube-proxy:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:27] Deleting docker image k8s.gcr.io/cloud-controller-manager:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:27] Deleting docker image k8s.gcr.io/kube-controller-manager:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:27] Deleting docker image k8s.gcr.io/kube-apiserver:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:42] Deleting conformance image k8s.gcr.io/conformance-amd64:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:43] Deleting hyperkube image k8s.gcr.io/hyperkube-amd64:v1.14.11-beta.1.2_c8b135d0b49c44-dirty

+++ [0716 00:44:43] Docker builds done

+++ [0716 00:44:43] Building tarball: server linux-amd64

+++ [0716 00:45:51] Building tarball: final

+++ [0716 00:45:52] Waiting on test tarballs

+++ [0716 00:45:52] Starting tarball: test linux-amd64

+++ [0716 00:46:30] Building tarball: test portable

+++ [0716 00:46:30] Building tarball: test mondo (deprecated by KEP sig-testing/20190118-breaking-apart-the-kubernetes-test-tarball)

###

# list all the generated folders

###

> cd ~/gop/src/k8s.io/kubernetes/_output

> ls -lh

total 20K

drwxr-xr-x 4 root root 4.0K Jul 16 00:34 dockerized

drwxr-xr-x 3 root root 4.0K Jul 16 00:33 images

drwxr-xr-x 3 root root 4.0K Jul 16 00:44 release-images

drwxr-xr-x 8 root root 4.0K Jul 16 00:45 release-stage

drwxr-xr-x 2 root root 4.0K Jul 16 00:46 release-tars

###

# list all the generated tar files, which contain all k8s components

###

> cd ~/gop/src/k8s.io/kubernetes/_output/release-tars

> ls -lh

total 1005M

-rw-r--r-- 1 root root 13M Jul 15 18:41 kubernetes-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 72K Jul 15 18:41 kubernetes-manifests.tar.gz

-rw-r--r-- 1 root root 94M Jul 15 18:42 kubernetes-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 430M Jul 15 18:46 kubernetes-server-linux-amd64.tar.gz

-rw-r--r-- 1 root root 28M Jul 15 18:41 kubernetes-src.tar.gz

-rw-r--r-- 1 root root 221M Jul 15 18:46 kubernetes-test-linux-amd64.tar.gz

-rw-r--r-- 1 root root 190K Jul 15 18:46 kubernetes-test-portable.tar.gz

-rw-r--r-- 1 root root 221M Jul 15 18:47 kubernetes-test.tar.gz

-rw-r--r-- 1 root root 624K Jul 15 18:46 kubernetes.tar.gz

###

# list all the generated container images, which contain all k8s components

###

root@k8scc-ubuntubox:~/gop/src/k8s.io/kubernetes/_output/release-images/amd64# ls -lh

total 1.8G

-rw-r--r-- 2 root root 138M Jul 15 18:42 cloud-controller-manager.tar

-rw-r--r-- 1 root root 566M Jul 15 18:45 conformance-amd64.tar

-rw-r--r-- 1 root root 584M Jul 15 18:45 hyperkube-amd64.tar

-rw-r--r-- 2 root root 202M Jul 15 18:42 kube-apiserver.tar

-rw-r--r-- 2 root root 152M Jul 15 18:42 kube-controller-manager.tar

-rw-r--r-- 2 root root 81M Jul 15 18:42 kube-proxy.tar

-rw-r--r-- 2 root root 80M Jul 15 18:42 kube-scheduler.tar

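Comparing Native and Docker Builds by Their Results

Having tried both build approaches, here are my impressions:

- Native build results

  - Raw binaries. Single components can be built on their own, which suits quick tests.

  - If you run make all, the machine should ideally have at least 8G of memory.

- Docker build results

  - Binaries and container images are already packaged for you, which suits direct distribution.

  - The build consumes more system resources; the machine must have at least 8G of memory.

  - The default Docker build command, make quick-release, generates files that take up a lot of disk space (nearly 8G):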

> cd ~/gop/src/k8s.io/kubernetes/_output

> du -smh dockerized/ images/ release-images/ release-stage/ release-tars/

1.9G dockerized/

24K images/

1.8G release-images/

3.1G release-stage/

1005M release-tars/
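Given the disk usage above, it can help to check free space before a containerized build and to measure the output afterwards. The following is a minimal sketch using only POSIX du and df; the helper names and the 8 GiB default are assumptions derived from the numbers in this article.

```shell
#!/bin/sh
# Sketch: disk checks around `make quick-release`.
# Helper names and the 8 GiB default are assumptions, not official tooling.

# Size of a directory tree in MiB (rounded down), via POSIX `du -sk`.
dir_size_mb() (
    kb=$(du -sk "$1" | awk '{print $1}')
    echo $((kb / 1024))
)

# Free space in KiB on the filesystem that holds the given path.
free_kb() {
    df -Pk "$1" | awk 'NR == 2 {print $4}'
}

# Succeed when at least <need_gb> GiB (default 8) are free under <path>.
has_room() (
    need_kb=$(( ${2:-8} * 1024 * 1024 ))
    [ "$(free_kb "$1")" -ge "$need_kb" ]
)

# Example use (commented out; paths follow the article's layout):
# has_room ~/gop/src/k8s.io/kubernetes || echo "less than 8G free, quick-release may fill the disk"
# dir_size_mb ~/gop/src/k8s.io/kubernetes/_output
```

When the space is needed back, the Makefile's clean target (make clean) is the usual way to remove the build output.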

So if you are just experimenting for yourself, I recommend the native build; if you plan to build for distribution, I recommend the Docker build.